Apparently, we are already on the threshold of a new era of creativity, when AI and artist become co-authors, complementing each other in the areas and “skills” where they are strongest.

Of all the questions people have been asking in recent weeks and months, this is not the most obvious one. Yet the pause caused by the pandemic is a good moment to reflect on the new realities created by the active use of digital technology, which there is rarely time to think about in normal circumstances.

The influence of new technologies on the nature of creative processes

New digital technologies, artificial intelligence in particular, are radically changing not only "traditional" technical professions. They have also entered the artistic world, affecting creative processes and giving rise to a phenomenon known as digital art.

They already play an important role in creative fields such as music, architecture, and the fine arts. Modern cinema and music are impossible to imagine without digital processing and computer effects. The computer has literally become the canvas, the brush, and the musical instrument at once. The next step in the development of digital art will apparently be the use of the computer no longer only as a tool for realizing human ideas, but as an independent creative entity. This viewpoint has given rise to a new field of artificial intelligence (AI) called computational creativity [1].

At the same time, the development of digital art raises a new question: can AI become not just an artist’s tool, but an independent author?

To answer it, let's look at the existing methods of working with images and ask whether they can claim creative independence.

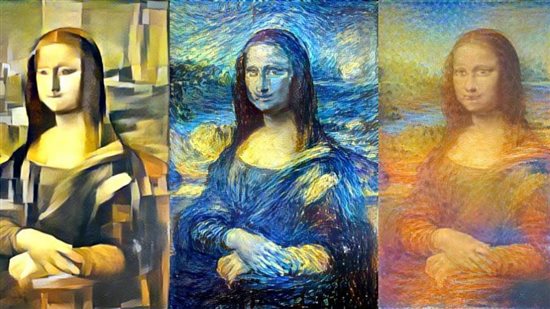

Neural style transfer

Neural style transfer is the simplest and most popular use of AI in creative work. The model restyles images using convolutional neural networks (CNNs) and is built into popular mobile applications such as DeepArt and Prisma. It takes two images as input: a style template and an original. The algorithm optimizes the result so that its representations in the intermediate CNN layers, which capture the higher-level features of an image, are as close as possible to those of both the template and the original. The degree of stylization can be adjusted. Given a library of templates, the technology can successfully imitate the style of Van Gogh or Monet; each template corresponds to a set of parameters of a pre-trained neural network. It also makes it possible to use character images in advertising and promotion.

The use of this kind of technology inevitably raises the issue of copyright. Stylizing famous characters raises the question of where the boundary lies between the stylized image and the original, and how to protect the rights of brand owners. AI could apparently address this by creating a composite image based on recognizable brands and supplementing it with "random noise."
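To make the optimization target more concrete, here is a minimal sketch in plain Python. It assumes the widely used formulation in which content is compared through raw feature maps and style through Gram matrices (channel correlations); the article does not say which exact formulation DeepArt or Prisma use. The function names and the tiny hand-written "feature maps" are illustrative only, and a real system would take them from a pre-trained CNN.

```python
def gram_matrix(features):
    """Channel-by-channel correlations of one layer's feature maps.

    `features` is a list of channels; each channel is a flat list of
    activations (height * width values).
    """
    n = len(features[0])
    return [[sum(a * b for a, b in zip(ci, cj)) / n for cj in features]
            for ci in features]


def mean_squared_diff(a, b):
    """Mean squared difference between two equally shaped nested lists."""
    flat_a = [v for row in a for v in row]
    flat_b = [v for row in b for v in row]
    return sum((x - y) ** 2 for x, y in zip(flat_a, flat_b)) / len(flat_a)


def style_transfer_loss(result, original, template, style_weight=1.0):
    """Content term plus weighted style term (assumed formulation)."""
    content = mean_squared_diff(result, original)
    style = mean_squared_diff(gram_matrix(result), gram_matrix(template))
    return content + style_weight * style


# Hypothetical 2-channel feature maps with 2 activations each.
original = [[1.0, 2.0], [3.0, 4.0]]
template = [[0.0, 1.0], [1.0, 0.0]]

# A result identical to the original has no content error, so the
# whole loss comes from the style mismatch with the template.
print(style_transfer_loss(original, original, template, style_weight=1.0))
```

Here `style_weight` plays the role of the adjustable stylization factor: at zero the best result is simply the original image, and larger values pull the result toward the template's textures.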

The output of the intermediate layers of a neural network is also used in DeepDream, a technology released by Google in 2015. Its results are closest in style to late Dalí and the psychedelic art of the 80s. If a photograph of a real object is fed to the model, the result can be hard to distinguish from the work of an artist; in that informal sense, the technology passes a kind of Turing test. The model's main parameter is the depth of processing, which is in effect the index of the neural network layer whose output is used.

At the moment, the TensorFlow framework allows you to implement the model on the local machine with a few lines of code.
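The core idea, amplifying whatever a chosen layer already responds to, can be illustrated without any framework. The sketch below is not the TensorFlow implementation: it assumes a toy one-layer "network" with hand-picked weights and performs gradient ascent on the image itself, which is the mechanism DeepDream is generally described as using. All names are hypothetical.

```python
def layer_activations(weights, image):
    """ReLU(W @ image) for a toy one-layer 'network'."""
    return [max(0.0, sum(w * x for w, x in zip(row, image)))
            for row in weights]


def dream_objective(weights, image):
    """Mean squared activation of the layer: the quantity being amplified."""
    acts = layer_activations(weights, image)
    return sum(a * a for a in acts) / len(acts)


def dream_step(weights, image, step_size=0.1):
    """One gradient-ascent step on the image (the weights stay fixed)."""
    acts = layer_activations(weights, image)
    n = len(acts)
    # d/dx mean(relu(Wx)^2) = (2/n) * W^T relu(Wx); inactive units
    # contribute nothing because their activation is zero.
    grad = [sum(2.0 * a * row[k] for a, row in zip(acts, weights)) / n
            for k in range(len(image))]
    return [x + step_size * g for x, g in zip(image, grad)]


# Toy weights and a 2-pixel "image", both made up for illustration.
weights = [[1.0, 0.0], [0.0, -1.0]]
image = [0.5, 0.5]
before = dream_objective(weights, image)
for _ in range(5):
    image = dream_step(weights, image)
print(before, dream_objective(weights, image))
```

In a real model, choosing a deeper layer changes which patterns get amplified, which corresponds to the depth parameter mentioned above.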

GAN

Art based on artificial intelligence attracted media and public attention after "Edmond de Belamy," a painting created by the French art group Obvious, was sold at a Christie's auction on Oct. 25, 2018, for $432,500. The work is a blurry portrait of a man printed on a 700 x 700 mm canvas. It was created using a GAN (generative adversarial network). The technology uses two neural networks: one (the generator) produces pseudo-random images by sampling from a given distribution, and the other (a CNN discriminator) judges the plausibility of each image based on the training set. The discriminator is a binary classifier that tries to answer the question "is this image man-made?" If the answer is negative, the sample is labeled as a failure. The discriminator is trained on a labeled set of fake and human-created images. Both neural networks are connected in a closed loop.

Most of the pioneers of AI art use GANs. Among them is Anna Ridler, who believes that these networks produce the most visually interesting results. She created a training set of 10,000 photos of tulips taken during the flowering season and categorized them by hand. She then used software to generate a video of blooming tulips. Their appearance was driven by bitcoin volatility, and the stripes on the petals reflected the current price of the cryptocurrency. The work draws a historical parallel between the "tulip mania" that swept Europe in the 1630s and speculation in cryptocurrencies.

Another remarkable artist working with GANs is Helena Sarin, an artist in a more traditional sense who uses them to transform and enhance her own pencil-on-paper sketches. Sarin works exclusively with CycleGAN, a GAN variant that translates an image from one domain into another. In essence, she trains the network to give the images of one dataset the textures of another. For example, she translates her photos of food and drink into the style of her still lifes and flower sketches. Sarin explains that one benefit of CycleGAN is that it can work at high resolution even with small datasets.
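The closed loop between the two networks is usually expressed through a pair of loss functions. The sketch below assumes the standard binary cross-entropy formulation, in which the discriminator outputs the probability that an image is human-made; it is not taken from the Obvious project, and the probabilities are made-up numbers rather than outputs of a trained network.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: real samples should score 1, generated ones 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """The generator is rewarded when its samples are scored as human-made."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Hypothetical discriminator scores for two real and two generated images.
scores_real = [0.9, 0.8]
scores_fake = [0.2, 0.1]

print(discriminator_loss(scores_real, scores_fake))
print(generator_loss(scores_fake))
```

Training alternates between lowering the first loss by updating the discriminator and lowering the second by updating the generator, so each improvement on one side makes the task harder for the other.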
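What distinguishes CycleGAN from a plain GAN is, as far as the published method goes, a cycle-consistency term: translating an image to the other domain and back should return something close to the original. The sketch below assumes that formulation and uses trivial stand-in functions in place of trained networks; `to_sketch_style` and `to_photo_style` are hypothetical names.

```python
def cycle_consistency_loss(batch, forward, backward):
    """Mean absolute error between each input and its round trip."""
    total, count = 0.0, 0
    for sample in batch:
        restored = backward(forward(sample))
        total += sum(abs(r - s) for r, s in zip(restored, sample))
        count += len(sample)
    return total / count


# Stand-ins for the two translation networks (photo -> sketch style and back).
def to_sketch_style(image):
    return [v + 0.2 for v in image]


def to_photo_style(image):
    return [v - 0.2 for v in image]


photos = [[0.1, 0.4, 0.7], [0.3, 0.3, 0.9]]

# A pair of mutually inverse translations gives a loss near zero.
print(cycle_consistency_loss(photos, to_sketch_style, to_photo_style))
```

Because this term needs no paired examples, the two datasets (for instance photos and sketches) can be collected independently.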

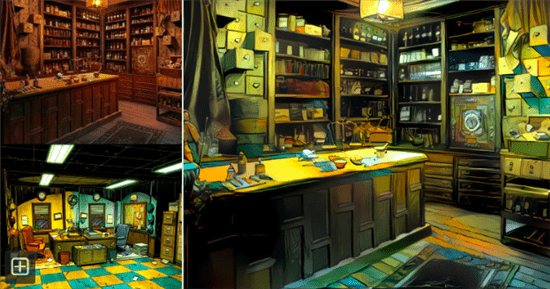

The image stylization that Helena Sarin uses in her artwork requires artistic taste and talent. Her paintings are a symbiosis of inspiration and a specific, painstakingly tuned neural network. But this technology is gradually becoming accessible to the untrained layperson as well. The entry threshold is being lowered by the image-to-image DeepFace technology developed at the Chinese Academy of Sciences.

The model produces a photo from a non-professional sketch, matching what was drawn as closely as possible. A library of photographs of human faces is used to train it. The model itself is an ensemble of two components: a sketch encoder and a generative adversarial network (GAN) that maps the result to a photo. The photos in the library are converted into sketches in advance. The author's sketch is converted into a vector representation by the encoder.

There is an additional, revolutionary option: the semi-transparent "shadow" of the best-matching image can be superimposed on the original sketch, allowing the artist to refine it based on typical facial proportions. In this way creativity becomes an iterative process, a symphony of author and machine that requires minimal training from the human: a hint is enough for the machine.

It can be assumed that in the future the collection of photos in the model's training set could be replaced with stylized works of individual artists and entire movements. Even now, the resulting photo can be fed into a CAN (creative adversarial network) with a library of paintings by famous masters, so that a stylized canvas is created step by step from the simplest sketch.
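One plausible way to use such vector representations is a nearest-neighbor lookup: the sketch vector is compared with the vectors of the library sketches, and the closest entries are used as references. The article does not describe the actual matching procedure, so the sketch below is only an assumption, and the library entries and their two-dimensional vectors are invented for illustration.

```python
import math


def nearest_faces(sketch_vector, library, k=1):
    """Return the names of the k library entries closest to the sketch."""
    ranked = sorted(
        library,
        key=lambda name: math.dist(sketch_vector, library[name]),
    )
    return ranked[:k]


# Hypothetical library: face name -> vector of its pre-computed sketch.
library = {
    "face_a": [0.0, 0.0],
    "face_b": [1.0, 1.0],
    "face_c": [5.0, 5.0],
}

print(nearest_faces([0.9, 1.2], library, k=2))
```

Real embeddings would have hundreds of dimensions, but the lookup itself stays the same.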

CAN

Another type of network, the CAN (creative adversarial network), works on the same principle as the GAN except for one important detail: the discriminator has many classes, each corresponding to a different style (impressionism, surrealism, and so on). As a result, the generator outputs stylized images. An example is "Summer Gardens" by the Italian artist Davide Quayola, presented at the exhibition "Artificial Intelligence and Dialogue of Cultures" at the Hermitage. Quayola filmed flowers swaying in gusts of wind in the late afternoon. From there it was not the artist but the creative adversarial network that took over, transforming the footage into paintings in the manner of the French impressionists. The palette and movements of the video remain unchanged: the network creates a new painting on top of the raw data.
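The multi-class discriminator described above can be pictured as a classifier head that turns raw scores into a probability for each style. The sketch below shows only that step, under the assumption of a softmax output; the style names and scores are made up, and the way a real CAN feeds this signal back to the generator is not covered here.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    shift = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify_style(style_logits, style_names):
    """Return the most probable style and its probability."""
    probs = softmax(style_logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return style_names[best], probs[best]


# Hypothetical discriminator scores for one generated image.
styles = ["impressionism", "surrealism", "cubism"]
name, prob = classify_style([0.1, 2.0, -1.0], styles)
print(name, round(prob, 3))
```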

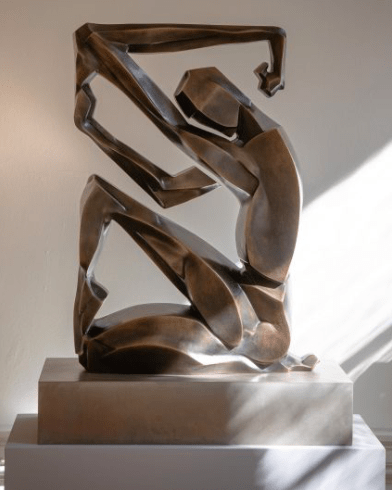

Sculpture

Sculptures created with artificial intelligence are not yet as popular as paintings, but work in this direction is under way. AI is mostly used either to generate a design with a GAN or to develop a three-dimensional model directly. Scott Eaton's sculpture debuted at "Artist + AI: Figures and Forms" and was created in collaboration with artificial intelligence tools; in this case, AI translates drawings into three-dimensional form. Another example is Ben Snell's "Dio," made with a technology whose details are not disclosed. The training set consisted of 1,000 classical sculptures. According to the artist, his main goal was not to make Dio human.

Summary

Neural style transfer and DeepDream make it possible to create objects that in many cases are indistinguishable from human creations. Random image generation in CANs adds spontaneity to AI creativity and is a step beyond deep stylization. The gap between AI and humans is certainly narrowing. However, it is unlikely to be fully bridged anytime soon, since it is still a human who configures the model, selects the training examples, and uses the technology for creative work.

The idea that machines could be artists, or could even replace artists as they have already replaced some professions, still seems too bold.

Artificial intelligence offers artists in the visual arts and entertainment industries (game design, cinema, CGI, and so on) extraordinary tools and an unusual new field for experimentation, and it simplifies and automates routine processes. However, the more automated the creation of artworks becomes, the more valuable the idea behind them.

Now that execution, physical realization, and technical skill are no longer the obstacle, new ideas are the main driving force in the development of art. And generating these ideas is the main function that artificial intelligence cannot (or cannot yet) take away from the creator.