Meta AI, the branch of Facebook’s parent company responsible for artificial intelligence, published a report this week on Make-A-Scene, an exploratory research project “that demonstrates AI’s potential for empowering anyone to bring their imagination to life,” according to a July 14 blog post.

Make-A-Scene aims to go one step further than the typical text-to-image generator by letting users draw a freeform digital sketch of a scene, which the network then uses as the basis for its final image.

“Our model generates an image given a text input and an optional scene layout,” the report reads. “As demonstrated in our experiments, by conditioning over the scene layout, our method provides a new form of implicit controllability, improves structural consistency and quality, and adheres to human preference.”

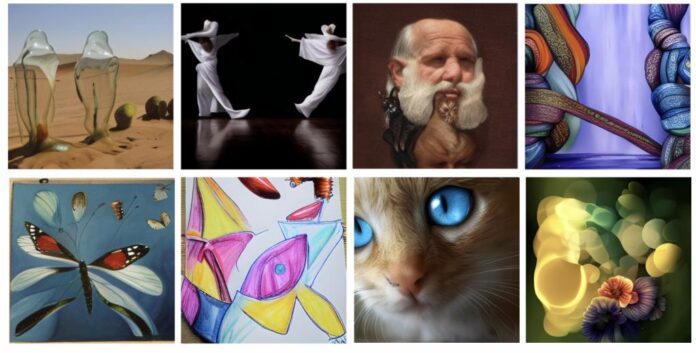

Images generated by Refik Anadol in collaboration with Meta’s Make-A-Scene program. Photo: courtesy of Meta.

Generative art is a longstanding, critical form, though the medium has recently enjoyed a heyday in the mainstream. Many might remember that a work created using AI by the French art collective Obvious fetched $432,500 at Christie’s in 2018. And new media pioneers like Herbert Franke and Refik Anadol have been employing the medium to create works of conceptual depth and poignancy.

“To realize AI’s potential to push creative expression forward, people should be able to shape and control the content a system generates,” Meta AI’s blog post reads. “It should be intuitive and easy to use so people can leverage whatever modes of expression work best for them.”

“Imagine creating beautiful impressionist paintings in compositions you envision without ever picking up a paintbrush,” the post continued. “Or instantly generating imaginative storybook illustrations to accompany the words.”

To fine-tune their results, the team at Meta asked human evaluators to assess the images created by the AI program. “Each was shown two images generated by Make-A-Scene: one generated from only a text prompt, and one from both a sketch and a text prompt,” their post says.

Adding the sketch resulted in an image that was better aligned with the text description 66.3 percent of the time. Meta notes that Make-A-Scene can also “generate its own scene layout with text-only prompts, if that’s what the creator chooses.”

Images generated by Sofia Crespo in collaboration with Meta’s Make-A-Scene program. Photo: courtesy of Meta.

The software hasn’t gone live to the general public. So far, it has only been rolled out to a few employees and select AI artists, like Sofia Crespo, Scott Eaton, Alexander Reben, and, naturally, Refik Anadol. “I was prompting ideas, mixing and matching different worlds,” Anadol remarked of his experience using the program. “You are literally dipping the brush in the mind of a machine and painting with machine consciousness.”

At the same time, Andy Boyatzis, a program manager at Meta, “used Make-A-Scene to generate art with his young children of ages two and four. They used playful drawings to bring their ideas and imagination to life.”

Since the report was released, Meta has increased the potential resolution of Make-A-Scene’s output four-fold, to 2048 x 2048 pixels. The company has also promised to provide open access to demos moving forward. For now, though, it advises keeping an eye out for more details during its talk at the European Conference on Computer Vision (ECCV) in Tel Aviv this October.