History’s most legendary works of photojournalism have gripped viewers by capturing one emblematic moment that brings to life what might otherwise seem like a remote or implausible event. Would the images have the same emotional charge if those instances of defiance, struggle, and camaraderie weren’t real?

Amnesty International deleted A.I.-generated images from its social media depicting protestors at a demonstration in Colombia after their use was criticized by photojournalists and other online commentators.

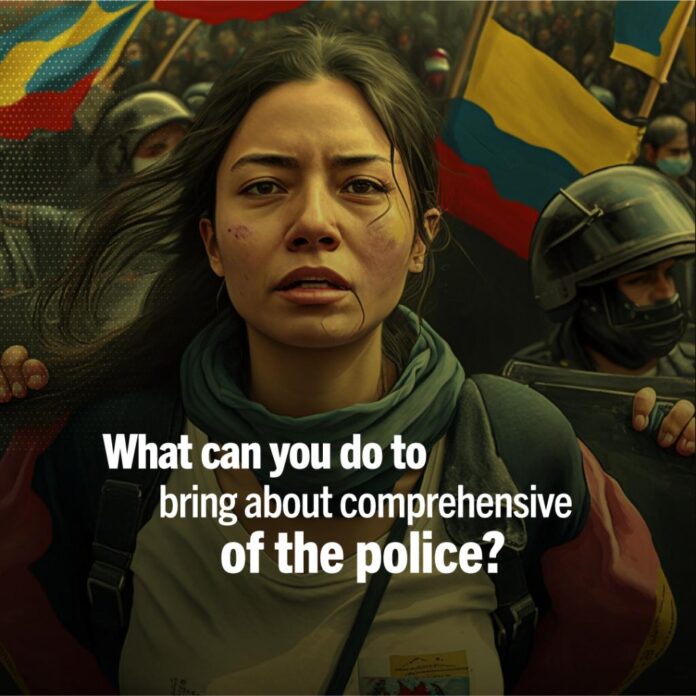

In one image, a woman draped in the colors of the Colombian flag is being manhandled by police officers in riot gear. At first, it feels almost familiar, recalling the widespread images of police brutality in response to a wave of protests against an unpopular tax reform in the country in 2021. At least 40 people were reported to have been killed by the police, with many more reported missing, according to Colombia’s human rights ombudsman.

On closer inspection, however, the faces in this image are slightly blurred and the colors on the woman’s flag are in the wrong order.

The A.I.-generated image had been used by the human rights organization to promote an online report that documents the actions of Colombia’s state forces in 2021, which allegedly included abductions, sexual violence, and torture, and that calls for reform.

Amnesty International’s campaign for police reform in Colombia. Photo courtesy of Amnesty International.

Amnesty International said it replaced the photographs that would normally accompany this type of report in order to protect the identities of protestors from retaliation. The images were accompanied by a disclaimer clearly stating that they were A.I.-generated.

“Many people who participated in the National Strike covered their faces because they were afraid of being subjected to repression and stigmatization by state security forces,” said Amnesty International in a statement shared with Artnet News. “Those who did show their faces are still at risk and some are being criminalized by the Colombian authorities.”

The organization said it consulted with partner organizations in Colombia and concluded that if Amnesty had shown the real faces of those who took part in the protests, “it would have put them at risk of reprisal.”

Amnesty International said its “intention was never to create photorealistic images that could be mistaken for real life,” adding that intentionally including “imperfections in the A.I.-generated images was another way to distinguish these images from genuine photographs.”

The organization removed the images from social media. “We don’t want the criticism for the use of A.I.-generated images to distract from the core message in support of the victims and their calls for justice in Colombia,” a director at Amnesty, Erika Guevara Rosas, told

The Bogotá-based photojournalist Juancho Torres, whose work has often been published by , told the paper: “We are living in a highly polarized era full of fake news, which makes people question the credibility of the media. And as we know, artificial intelligence lies. What sort of credibility do you have when you start publishing images created by artificial intelligence?”

Photojournalists may also be concerned about the threat that A.I.-generated images would pose to their livelihoods if the use of A.I.-generated stand-ins for real photography were to become commonplace.

“We do take the criticism seriously and want to continue the engagement to ensure we understand better the implications [of using A.I.-generated images] and our role to address the ethical dilemmas posed by the use of such technology,” said Guevara Rosas.
